Community docs: disaster recovery

Definition of a disaster

Test scenario

10,453,901 duplicati-b69a2a32a50bb4c6d8780389efdbf7442.dblock.zip.aes

8,173 duplicati-i84de11dd9a334727a080a3cdedc11f76.dindex.zip.aes

10,409,309 duplicati-bb1e603b91cae420787ed855d40e7cc04.dblock.zip.aes

9,677 duplicati-i77fdd0fa598d49fa93c5fedf3dbf4003.dindex.zip.aes

10,408,733 duplicati-b8fd38dcd303c4bcdb65dc15611f9b13b.dblock.zip.aes

8,317 duplicati-id6042b5ed9c34faa86e41fd3bcff72d2.dindex.zip.aes

10,465,421 duplicati-bb1c167fdb8ef46e6a83fe1d5b8b33cbf.dblock.zip.aes

6,765 duplicati-i1070213c1cea4844b3ace60c305854de.dindex.zip.aes

10,484,045 duplicati-b2b4fb88d1edd4eccade6b0ea6fdbfcf3.dblock.zip.aes

11,341 duplicati-i0c85219ca5764fb183b4306e65ed2034.dindex.zip.aes

10,472,541 duplicati-bc70688944c1b4875b7561e8046dd582d.dblock.zip.aes

8,301 duplicati-i726df1085487421b98bf9786e40d045f.dindex.zip.aes

10,384,701 duplicati-bf43697c750e746aead28ceb71af19359.dblock.zip.aes

9,101 duplicati-ie084e3c9380847a8a01acefcb8245fe3.dindex.zip.aes

10,392,317 duplicati-bf797fdce00794d0dbeb31de1f3867240.dblock.zip.aes

7,501 duplicati-i91b0156c9ced43bda26b6cef88f969b3.dindex.zip.aes

1,754,637 duplicati-b13a41763d40e4001911fd6f5d5d6c53d.dblock.zip.aes

3,709 duplicati-id79f5ccc6cb54f5faa7bbcf72c8e7428.dindex.zip.aes

5,133 duplicati-20171109T100606Z.dlist.zip.aes

10,415,597 duplicati-bb4cb32561132426eba2e190089585362.dblock.zip.aes

8,125 duplicati-ifd7c3d7bed47403197515b40821075fc.dindex.zip.aes

10,433,469 duplicati-b385c55aa15bd403e9fcb5a321339e76a.dblock.zip.aes

6,909 duplicati-i59e5c4064b3d422995200772bd267645.dindex.zip.aes

10,447,373 duplicati-b4e0bcd6b8c0b4d648a97e53c32550cce.dblock.zip.aes

8,829 duplicati-i21d3fcafc53b42bfa0dfe4cfdcc6a0d9.dindex.zip.aes

10,425,485 duplicati-b34811487cc1843e289ac577b6a7a8533.dblock.zip.aes

8,093 duplicati-i92dcf425b2d14c8780c2938f84e2bc2c.dindex.zip.aes

8,052,173 duplicati-bc385594379874159b0863dca12818ac7.dblock.zip.aes

8,621 duplicati-ib02c8a76143440b99ec94773a7b00c90.dindex.zip.aes

6,829 duplicati-20171109T100653Z.dlist.zip.aes

10,397,085 duplicati-b836b265755ff41ae908ef64e551ec63b.dblock.zip.aes

8,717 duplicati-i0903704f50ec4277a0cb6e224bde49cb.dindex.zip.aes

10,404,509 duplicati-bc230fc2ccec54a33b2035fcbc0231ce4.dblock.zip.aes

7,741 duplicati-i53b454d535de49e1983364b97bd681a1.dindex.zip.aes

10,463,597 duplicati-b54b864868bf341bcb88bff9ad786b8a3.dblock.zip.aes

4,365 duplicati-id1e3d59e5e3e48f59a6fbd7f46b4bb8c.dindex.zip.aes

10,413,933 duplicati-b2b06934c5d764025a6965f1749d86a90.dblock.zip.aes

11,421 duplicati-ifd2b08eaf75b43748739c5a140cb1267.dindex.zip.aes

10,468,957 duplicati-b5f8cd40e22a54b5b988689370b8cde34.dblock.zip.aes

6,749 duplicati-ib9a135574fa84222beea313ac583463b.dindex.zip.aes

10,383,805 duplicati-bfa5ecd54953f455496a67b089e2ad35b.dblock.zip.aes

10,301 duplicati-i19aa26f9c15e432ea5114c93acd52661.dindex.zip.aes

4,505,165 duplicati-bd14cf57e975040808ac8d0f4bd9d5e36.dblock.zip.aes

4,797 duplicati-id08a17cbe0a04407b4be37d2c48e8ab9.dindex.zip.aes

8,749 duplicati-20171109T100737Z.dlist.zip.aes

10,483,357 duplicati-be6e935d55f0b443b8c716c83aebccb93.dblock.zip.aes

9,885 duplicati-ic209185103c045bb87a680e98b78269b.dindex.zip.aes

974,909 duplicati-b7fa18a6f863a42fea5f210cc5f1416e5.dblock.zip.aes

2,125 duplicati-i57ce5109be6c4441998fc2bfb2cd0f3a.dindex.zip.aes

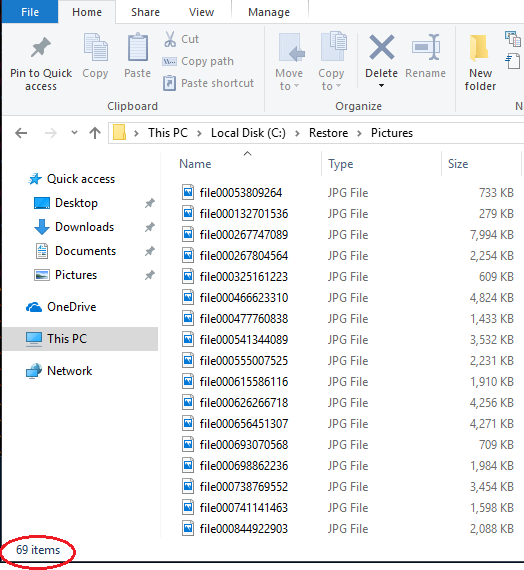

9,901 duplicati-20171109T100815Z.dlist.zip.aes

Inventory of files that are going to be corrupted
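
To take stock of a listing like the one above before deliberately damaging anything, a short script can tally the remote files by type. The following is a minimal Python sketch, not part of Duplicati: the function name `summarize_listing` and the regular expression are assumptions made for illustration. It assumes each entry is a comma-grouped number (apparently the size in bytes) followed by a file name ending in `.dblock.zip.aes`, `.dindex.zip.aes`, or `.dlist.zip.aes`, as in the listing.

```python
import re
from collections import defaultdict

# Matches entries such as "8,173 duplicati-i84de...dindex.zip.aes".
# The pattern is an assumption based on the listing format shown above.
LINE_RE = re.compile(
    r"^\s*([\d,]+)\s+(duplicati-\S+?\.(dblock|dindex|dlist)\.zip\.aes)\s*$"
)


def summarize_listing(text):
    """Return {kind: (file_count, total_size)} for a size/file-name listing."""
    counts = defaultdict(int)
    sizes = defaultdict(int)
    for line in text.splitlines():
        match = LINE_RE.match(line)
        if match is None:
            continue  # skip blank lines and anything that is not an entry
        size = int(match.group(1).replace(",", ""))
        kind = match.group(3)
        counts[kind] += 1
        sizes[kind] += size
    return {kind: (counts[kind], sizes[kind]) for kind in counts}


# Three entries copied from the listing, used here as sample input.
sample = """
10,453,901 duplicati-b69a2a32a50bb4c6d8780389efdbf7442.dblock.zip.aes
8,173 duplicati-i84de11dd9a334727a080a3cdedc11f76.dindex.zip.aes
5,133 duplicati-20171109T100606Z.dlist.zip.aes
"""

for kind, (count, total) in sorted(summarize_listing(sample).items()):
    print(f"{kind}: {count} file(s), {total:,}")
```

Running the same function over the full listing gives a per-type count and total that can be compared against the remote folder again after files have been removed or altered. Lines that do not match the expected pattern are silently skipped, so a noticeably low count is a hint that the input needs a closer look.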

Making the backup inconsistent

Prerequisites for recovery

Recovering by purging files

Recovering by using the Duplicati Recovery tool

Downloading all remote files using the Recovery Tool

Creating an index of downloaded files using the Recovery Tool

List backup versions and files using the Recovery Tool

Restoring files using the Recovery Tool
